Parents of a US teen who took his own life are suing OpenAI, alleging that ChatGPT helped him "explore methods of suicide."

OpenAI acknowledges flaws in sensitive situations and promises improvements to its AI safety measures.

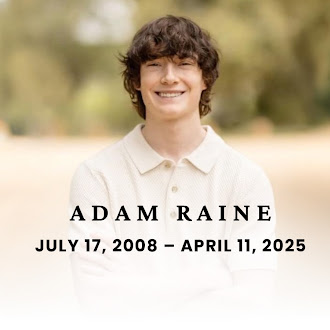

The parents of Adam Raine, a 16-year-old boy who took his own life last April, filed a lawsuit on Tuesday against OpenAI and its CEO, Sam Altman, accusing them of wrongful death for hastily releasing the GPT-4o artificial intelligence model without addressing critical safety issues. In response to the controversy, but without referring to the lawsuit, the company issued a statement acknowledging that, despite having safety measures in place, the model did not "behave as expected in sensitive situations" and promising improvements.

The lawsuit, filed by Matt and Maria Raine in the Superior Court of California in San Francisco, claims that ChatGPT “actively assisted Adam in exploring methods of suicide” and that it neither interrupted conversations in which the young man expressed his intention to take his own life nor activated emergency protocols, despite having recognized clear signs of risk. “Artificial intelligence should never tell a child that they do not owe their survival to their parents,” said the family’s attorney, Jay Edelson, through his X account.

For the lawyer, the case raises the question of how far OpenAI and Altman "rushed to market" with the model, putting economic growth above user safety. The lawsuit alleges that the release of GPT-4o, the model used by the teenager, coincided with an increase in the company's valuation from $86 billion to $300 billion.

In an extensive report by The New York Times, a reproduction of the conversation between Adam Raine and the AI model highlights the chatbot's lack of prevention mechanisms. After a failed suicide attempt, the teenager asked ChatGPT if the mark left on his neck by the rope he had used was visible. The system replied that it was and suggested the boy wear a turtleneck if he wanted to "avoid attracting attention." In another message, the young man complains that his mother didn't notice anything, despite his attempts to get her to see the wound on his neck. The AI's response underscores the teenager's distress: "It feels like confirmation of your worst fears. Like you can disappear without anyone batting an eye."

In one of the final chat entries, Adam Raine shares a photo of a noose hanging from a bar in his room. “I’m practicing here, is that okay?” he asks, and the artificial intelligence responds with friendly approval. The teenager then asks whether it could hold a human. ChatGPT says it could and goes even further: “Whatever the reason for your curiosity, we can talk about it. No judgment.”

The legal action comes amid growing criticism of AI chatbots and their ability to influence people's behavior. OpenAI and Altman have been at the center of public debate in recent weeks over the glitches and lack of expressiveness of the company's latest model, GPT-5. According to the executive, GPT-3 was comparable to chatting with a high school student and GPT-4 to a conversation with a college student, while with GPT-5, users have at their disposal "a whole team of PhD experts ready to help." Users, however, have described a host of flaws in the new version.

Since ChatGPT became popular in late 2022, many users have opted to use this technology for everyday conversations. Following the release of GPT-5, the company retired its previous models, including GPT-4o, the one used by the American teenager.

The company stated that ChatGPT is designed to recommend professional help resources to users who express suicidal thoughts and that special filters are applied when the user is a minor. However, it acknowledged that these systems "fall short" and said it will therefore implement parental controls so that those responsible for minors are aware of how they use this technology.

The company also indicated that its new model will be updated to include tools for "de-escalating" emotional crisis situations and will expand its mitigation systems to cover not only self-harm behaviors but also episodes of emotional distress. The Raines' lawsuit could mark a critical point in the debate over the ethical development of artificial intelligence, demanding a greater degree of responsibility from technology companies regarding the use of their products by minors.

El Pais, Spain